In a recent talk at the National Academies of Sciences, Engineering, and Medicine workshop on “real-world evidence”, Professor Rob Califf highlighted the failures of the current healthcare evidence generation system and presented a new approach to conducting randomised trials. You can watch the talk here.

The current system of evidence generation rests on a mistaken focus on precision in clinical trials, which has come at the expense of getting reliable results. The result is a massive increase in the cost of doing trials without a corresponding increase in quality. Following SOPs has become more important than the science of clinical investigation. One of the most damaging effects is the effort to record each data item more precisely, in the mistaken belief that this will yield more reliable estimates of treatment effect for outcomes that matter.

This old system was built at a time when automation was not possible because data in an electronic format largely did not exist. Doctors wrote things on paper, and over time this information was transferred to a computer. Nurses and others then flew around on airplanes at great expense to check that the information matched. This led to bizarre practices in which nurses printed out electronic health records to produce a paper record so that a monitor could check that it matched what was in the trial’s electronic record. The cost of this monitoring runs to hundreds of millions of dollars for some trials. The regulators devised a doctrine called GCP to enable these things to be done in a consistent fashion, but consistency doesn’t necessarily mean better, especially if one set of rules is applied to an entire array of different questions that need to be asked. Things were made even worse by “local experts” taking the doctrine and turning it into voluminous SOPs with multiple interpretations.

In a mistaken understanding of the theory and purpose of clinical trials, the regulated clinical trial industry has diverted enormous resources to an effort to increase precision, and the academic trials community has adopted some of this thinking through the proliferation of GCP. Tearing down the entire structure would be counterproductive, as people need structure to conduct these complex human experiments, but the focus needs to change from precision to reliability. For most clinical research, a focus on precision can actually detract from reliability because it limits, for example, the size of the study you can do or the number of endpoints you can have.

As a result, it is estimated that fewer than 15% of treatment guideline recommendations are supported by high-quality evidence.

In the second half of the talk, Califf described the four critical elements needed to build a new, effective evidence generation system. These are:

- Create a reusable system for research embedded in practice.

- Apply the principle of Quality-by-Design.

- Use technology to automate repetitive tasks.

- Operate from basic principles.

Create a reusable system for research embedded in practice

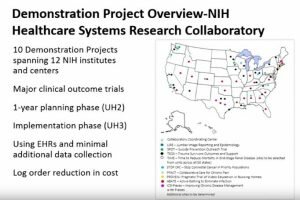

One of the major inefficiencies of current trials is the need to put in place the required infrastructure for each individual trial, only for it to be discarded at the end of the study. Instead, networks of investigational sites linked to co-ordinating centres and utilising routine electronic data are being created for the purpose of doing trials much more efficiently. An example of such a reusable system is the NIH Research Collaboratory project, which has undertaken a number of demonstration trials at about 10% of the cost of doing traditional randomised trials.

Apply the principle of Quality-by-Design

The second concept is to apply the principle of Quality-by-Design (QbD), which I recently covered; that post can be found here. Califf pointed out that identifying the errors that matter for an individual trial requires careful thought rather than following a rigid set of SOPs. QbD can’t be implemented by recipe; it requires an understanding of the question the trial is asking, the clinical context, and the needs of potential participants and future patients. The overall objective is to eliminate those errors that matter to the reliability of the result of the trial and the safety of trial participants.

Use technology to automate repetitive tasks

Califf used the example of Google Maps, where the question is no longer “do you have a map?” but rather “given that you have a map, how can you use that information?” In healthcare, continuous learning from the analysis of routine medical data is providing a platform for the more efficient conduct of research. The NIH Research Collaboratory is an example of such a learning healthcare system being used as a platform for research.

Operate from basic principles

Califf closed by pointing out that the new system won’t be built upon a rigid set of SOPs. New guidance will need to be developed, but instead of focusing on operational detail it will highlight the key principles needed to do a randomised trial well. This is exactly what we are planning to do at MoreTrials.
